It is not easy to surprise Bill Gates – but the OpenAI team managed to do so. Last September, the Microsoft founder’s challenge was met: AI (Artificial Intelligence) successfully passed an advanced high school biology exam – the same exam that the most talented students take to enter American higher education.

Gates set this particular task before OpenAI, because success required not only memorization, but also critical thinking and a deep understanding of the field. An additional condition was that the system should not be specially prepared or fine-tuned for this exam. Gates anticipated that the AI experts would be occupied with the task for years – but to his astonishment, after only a few months, he was invited to the demo. At this demo, the GPT AI not only passed the exam, but also provided correct answers to 59 out of 60 questions – and even managed to answer an empathy question (“What would you say to the father of a sick child?”) better than anyone else in the room.

Two months later, OpenAI researchers released a friendly chat interface to GPT that everyone could understand – and the resulting ChatGPT reached a hundred million users in another two months, thus becoming by far the fastest-spreading technology of all time.

What is GPT anyway?

GPT stands for Generative Pre-trained Transformer. It is a pre-trained (thus not having up-to-date knowledge), generative (i.e. capable of generating and creating), large language model.

GPT has a surprisingly simple structure. Like most neural networks, GPT uses only two of the four basic operations (multiplication, addition) and a non-linear function (e.g. the hyperbolic tangent). Even with this very limited set of operations, it can continue any given text by a few letters, much like a phone keyboard statistically predicts the next word based on the previous one or two words.
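To make the "multiplication, addition and a non-linear function" claim concrete, here is a minimal sketch of a single neural network layer in Python. The function name `layer` and the weight values are made up for illustration; a real GPT stacks many far larger layers and arranges them in the Transformer architecture, which this sketch does not attempt to show.

```python
import math

def layer(inputs, weights, biases):
    """One dense layer: multiply, add, then squash with tanh."""
    outputs = []
    for row, bias in zip(weights, biases):
        total = bias
        for x, w in zip(inputs, row):
            total += x * w                 # multiplication and addition only
        outputs.append(math.tanh(total))   # the non-linear function
    return outputs

# Hypothetical numbers: in a trained model these are the learned "parameters".
weights = [[0.5, -1.0], [1.5, 0.25]]
biases = [0.0, -0.5]
result = layer([1.0, 2.0], weights, biases)
print(result)
```

Every number in `weights` and `biases` counts as one parameter, so this toy layer has six of them.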

The differentiating factor is quantity. First, GPT can continue several thousand words of text (tens of thousands in the case of the latest models), equivalent to a whopping 50-60 typed pages.

Second, the corpus of text on which the AI was trained, at a cost of hundreds of millions of dollars, is vast. Although the available information is contradictory, it is certain that at least 300 billion words of text were used for the training.

Third, the model is enormous: the original “brain” of ChatGPT, GPT3, has 175 billion parameters, which means that there are that many numbers in the formula consisting of just additions, multiplications and some non-linear functions (no such information has been published about GPT4). The knowledge the model was fed during its training is stored in these numbers – even though its developers themselves don’t fully understand how.

If we “input” a text into this inconceivably large mathematical formula and perform hundreds of billions of operations, we get a couple of letters as a result. These letters are the most probable continuation of the text. By feeding the expanded text back into the system, we can get more and more letters – until an answer is formed. This process is evident in how ChatGPT slowly “types” the answers to our questions.
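The feedback loop described above can be sketched in a few lines of Python. This is a toy under loud assumptions: the "model" is a simple table of which word most often follows which (closer to the phone keyboard than to GPT), it works on whole words rather than letters or tokens, and the names `train_bigram` and `generate` are invented for this example. Only the loop itself mirrors the process: predict the most probable continuation, append it, feed the longer text back in.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for every word, which words follow it and how often."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, prompt, max_new_words):
    """Repeatedly append the most probable next word to the text."""
    words = prompt.split()
    for _ in range(max_new_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # the model has never seen a continuation for this word
        next_word = followers.most_common(1)[0][0]
        words.append(next_word)  # the expanded text is the next input
    return " ".join(words)

corpus = "the cat sat on the mat and the cat sat on the sofa"
model = train_bigram(corpus)
print(generate(model, "the", 4))  # prints "the cat sat on the"
```

A real language model replaces the lookup table with the huge formula, and usually samples among likely continuations instead of always taking the single most probable one.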

Quantity and Quality

In such orders of magnitude, quantity translates into quality. The AI, tuned to a significant part of humanity’s written knowledge, can answer questions in almost any field – while still working purely on a probabilistic basis. We can ask who the president of the USA is or what planets are in the solar system; request grammar correction; have it summarize a long academic paper in a way fifth-graders can understand; have it write a poem in the style of Shakespeare about the iPhone; or have it create a relatively simple computer program.

What is even more fascinating – and powerful – is that the AI can even combine these areas. We can ask it to write a phone app that shows the planets on the screen and then tests children’s knowledge about them. In this case, the AI can even take advantage of its knowledge of cognitive science and pedagogy to adapt its teaching method to individual students’ strengths and weaknesses.

The AI can recognize these interdisciplinary connections, enabling it to solve tasks that humans cannot or can only do with difficulty. As a programmer, I’ve asked ChatGPT (or one of its relatives) countless times to identify what could be wrong with my code, or to write a subtask in a field I’m not familiar with.

I’m not alone in this. Last year’s GitHub Copilot and ChatGPT (as well as other AIs) make software developers 55% more productive, according to surveys. It is said that 41% of the code uploaded to GitHub (the most important repository for open-source programs) is written by AI.

Professions in Danger

Personally, my biggest misconception about AI was that, as a software developer, I would be among the last whose job would be at risk. After all, someone has to program the AI, right?

Well… not quite. John Carmack (one of the world’s most famous programmers, known for games like Doom and Quake) said in an interview that almost any task can be automated with the existing neural network approach, as long as a machine can decide how correct a response is (say, on a scale of 0 to 1). If we can produce such a scoring function (in machine learning it is usually expressed as a loss function, which measures how wrong a response is), then it’s “just” a matter of data volume and computational capacity to train a neural network – at some level – for that task. Coding is an area where a lot of such data is available, already in convenient digital format. So, an ironic situation has arisen – we, software developers, are digging our own graves by making the fruits of our hobby / profession available to the community in the form of open-source code, open discussions, and arguments – and AI learns from this. Karma…
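To illustrate what "having a loss function" buys us, here is a deliberately tiny Python sketch. It is an assumption-laden stand-in, not Carmack's example: the "network" is a single number `weight`, the task is to learn that the output should be twice the input, and the names `loss` and `train` are made up. The point is only that once the machine can score its own answers, training is a mechanical loop of nudging the parameters to reduce the error.

```python
def loss(weight, data):
    """Mean squared error: 0 means perfect, larger means more wrong."""
    return sum((weight * x - y) ** 2 for x, y in data) / len(data)

def train(data, steps=200, learning_rate=0.05):
    """Gradient descent: repeatedly nudge the weight to lower the loss."""
    weight = 0.0
    for _ in range(steps):
        # Slope of the loss with respect to the weight.
        gradient = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= learning_rate * gradient
    return weight

# Hypothetical training data: each output is twice the input.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
learned = train(data)
print(learned, loss(learned, data))
```

With more data and more computing power, the same loop scales from one parameter to billions.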

But back to Carmack’s statement – based on the above, it is already predictable which professions AI will radically disrupt. A recent OpenAI study (https://arxiv.org/pdf/2303.10130.pdf) suggests that tax experts, authors, web developers, and mathematicians (!) are the most exposed to change. Legal and administrative assistants, data managers, interpreters, and marketers cannot rest easy either. Poets and PR experts are in a slightly better position. The safest are those who work in the physical world instead of in the realm of bits and bytes – veterinarians, agricultural professionals, car mechanics, chefs, electricians – as well as those who work with people: nurses, kindergarten teachers, lower-grade teachers, directors, etc.

Of course, the idea that “AI will take your job” is not black and white. A friend of mine writes blog posts almost daily, and recently he has been supplementing his posts with AI-generated images. This makes his blog posts more interesting and enjoyable – but in his case, AI does not take work away from graphic designers, because he certainly would not have paid a graphic designer to create new images daily.

At the same time, the undeniable increase in productivity that AI represents even in its current form is already resulting in job losses. If one worker (plus AI) can do the work of two people, then under the rules of capitalism, one of the employees will be laid off (because demand does not double overnight). Not on a massive scale just yet, but there are already numerous such stories circulating on the internet.

What Can We Expect in the Near Future?

The pace at which things change is staggering. In March, there was an announcement almost every single day – a new technology, piece of research or approach that turned the AI world upside down (which had already been turned upside down the day before). It’s a full-time job just to keep up with AI news.

But even if we can’t predict the big surprises (that’s why they are called surprises after all), there are a few things we can make educated guesses about.

- ChatGPT-level, open-source language models are already appearing. These remove the control of strong GPT models from the hands of tech giants. Anyone can create a language model that is tuned to their own taste, and with it they can overcome the – questionable, but at least existing – moral, ethical, or political barriers that Microsoft, OpenAI, Google, Meta and others put in front of their respective services. One can therefore create a text generator that tells them how to dispose of a dead body or manufactures political fake news ad infinitum. We can expect the “Nigerian Prince” spam emails to rise to a whole new level – where scammers will fine-tune their scam to our personal profile, with proper English and no spelling mistakes. You can run these models on a simple PC or even a laptop. Large open-source language models (such as Meta’s LLaMA) speed up innovation tremendously, and the trajectory points to the introduction of much more effective models that only lag behind the “big ones” by a few months in terms of quality.

- In the field of image generation, the monopoly of large companies is already over. Today anyone can fabricate pictures of celebrities or politicians in questionable situations (e.g. the Pope in a white puffer jacket or Trump running away from the police). Thus, there is no need to wait for the super-intelligent AI of sci-fi dystopias – even with currently available AI, an incredible amount of damage can be caused. Just think of the upcoming US (and worldwide) elections.

- Even speech and sound generation is getting the AI treatment. Based on a few minutes (or even seconds) of sample audio, you can already create fake voices that are getting good enough to be mistaken for a recording of any actor’s or politician’s voice (did I tell you about the dangers of AI when it comes to political misinformation?). The music industry can’t rest either – AI can generate convincing (but fake) Eminem vocals as well as a backing track, based on a text prompt.

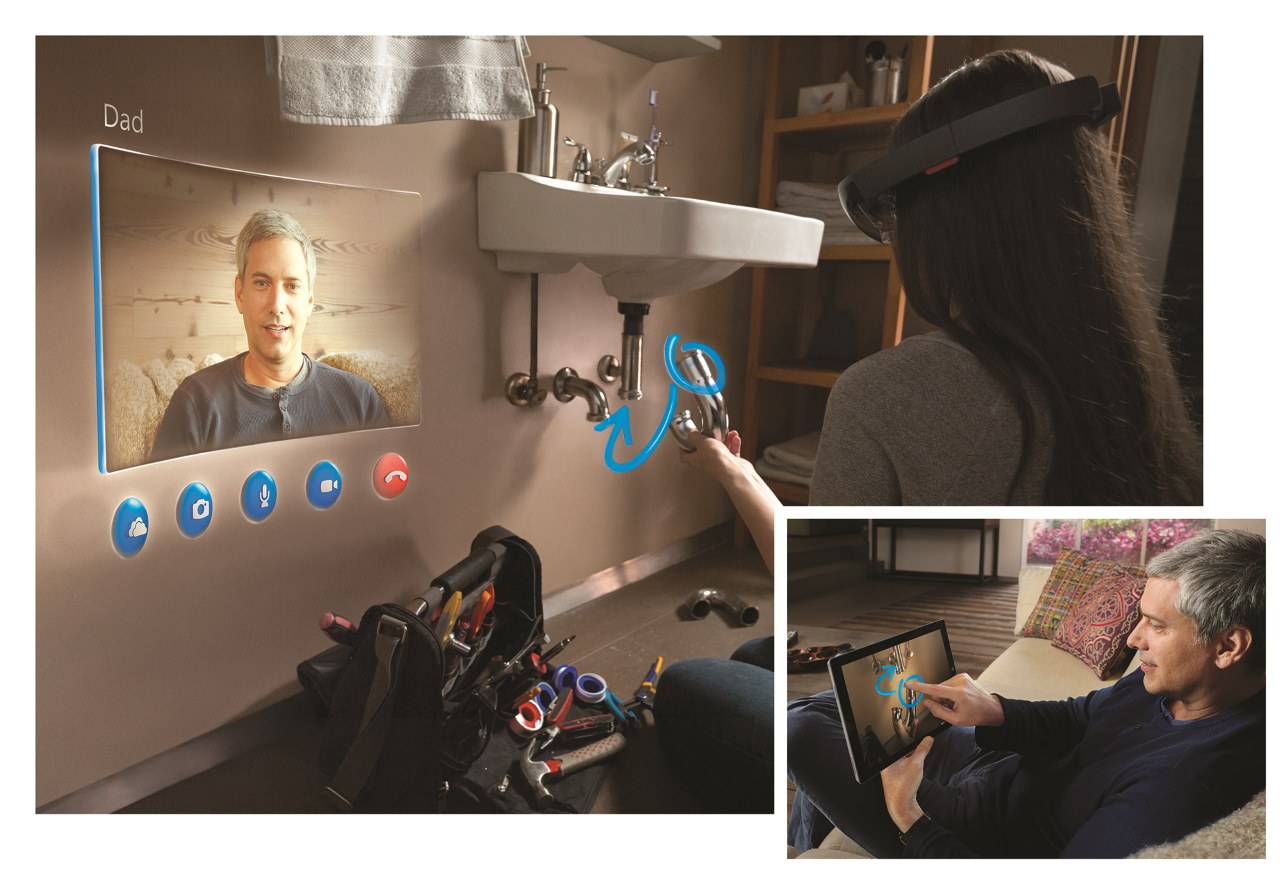

- Systems that can interpret multimodal inputs (image, sound, video in addition to text) are coming. The novelty is that GPT4 can work with text and image input at the same time, in the same model. Combined with its vast background knowledge, it can tell us what is funny in a picture, what happens when we cut a string that holds a weight, and it can even create a working website from a sketch scribbled on a piece of paper.

- ChatGPT plugins, which can be used to connect the chatbot to external services, are already undergoing private testing. They can search the Internet, thereby providing you with up-to-date information; analyze documents and use them as a source (so we can “ask” the camera manual how to turn on manual focus); book a table for dinner; invoke Wolfram Alpha for complex calculations; read and write our emails; create and run ad-hoc Python code; or even analyze an Excel table based on a natural language request.

- Speaking of Excel – Microsoft is integrating the chat module and other AI services into all Office products. This means that we can simply ask Excel to analyze trends and draw all kinds of nice graphs. Word will be able to produce an outline on any topic, and then fill the individual headers with text. PowerPoint uses AI to create designs. And in a corporate environment, we can simply say “Summarize the information I need for my next meeting and prepare a Sales presentation tailored to the customer”. The AI will know who we are meeting with, read and summarize our correspondence over the last month, and transform our standard sales presentation based on what we discussed with the client. Google, of course, is also planning similar things – although not as deeply integrated just yet.

- While we’re talking about Google, the company finds itself facing a textbook Innovator’s Dilemma. Internet search has already been disrupted by AI. Bing, which is considered a running joke in the search market, is making the Google bear dance. With Bing Chat, if I ask it a question, I don’t get a page full of links (and ads) – instead, the AI reads the search results and tries to give a direct answer to my question. This way, I can get to the answer much sooner, because I don’t have to go to each website and search for the relevant information in a long article. Of course, this is not good for Google, as its main revenue source is advertising – on its own site as well as on sites all over the web. At the same time, if Google deploys its own (much weaker) search engine AI, it also weakens its own revenue stream.

Can We Put the Genie Back in the Bottle?

A few days ago, an open letter signed by Elon Musk, Steve Wozniak, and other technology celebrities and experts was published, urging tech giants to temporarily suspend the development of language models stronger than GPT4. The reason for this is that the exponentially growing AI is getting out of our control. Despite a ton of research effort, it is admittedly difficult to understand, control, and most importantly align the AI’s “behavior” to the interests of its developers (or all of humanity).

In sci-fi literature, there are numerous cases of AI causing the end of the world, and there is no AI expert who would not be disturbed by this possibility. Although not among the signatories, OpenAI’s leader, Sam Altman, has also expressed his concern on this matter several times (interesting name by the way – if I were a robot, I would choose something similar).

Others believe that the scare tactics with AGI (Artificial General Intelligence – AI comparable to humans) and ASI (Artificial Super Intelligence – AI far surpassing humans) are merely PR stunts. Microsoft experts said they had discovered the initial sparks of AGI while studying GPT4, and this news has naturally made the headlines, directing attention to the company’s AI solutions.

In my opinion, today’s ChatGPT already knows more about medicine, programming, history, literature, languages, and almost any area of human existence and knowledge than 95% of people. And while it may be laughable that it cannot do math well (neither can a lot of people to be fair), or is weak in certain language games, there is no person on Earth who would know so much about so many things and be able to connect their knowledge to some extent. What is this if not general intelligence – even if completely alien?

If AI really brings the level of productivity increase that, for example, Microsoft hopes for, then this genie cannot be put back in the bottle, simply because of the laws of economics. Those who miss out will fall behind. If a key player stops development for six months, others will gain an insurmountable advantage. Or, according to cynics, some of the signatories of the open letter will catch up with the leaders… 🙂

But even if research could be legally curbed in the United States, other countries would still push forward. One such country is China, where there are no fears or moral considerations when it comes to economic, political, or world power, and which has long been using AI in various areas of life, often evoking Orwell’s 1984.

This genie cannot be put back in the bottle. Like it or not, a new arms race has begun – at least economically, but possibly also in terms of world politics and world power relations.

Economic Impact

It is difficult to predict the economic impact of the ongoing AI revolution, not least because we do not know what groundbreaking innovations may appear as early as tomorrow. PwC estimates that AI could make global GDP up to 14% higher by 2030, with about 45% of those gains coming from product enhancements: greater variety, personalization, and attractiveness.

If the drastically changed productivity does not come with a similarly sized increase in demand, the emergence of AI in production could result in shorter working hours or unemployment. Some experts are already talking about the need to introduce a basic income.

AI also thoroughly disrupts the startup ecosystem in tech. In several cases, I have seen that the key value proposition of a startup with millions of dollars in investment can be duplicated with a well-targeted prompt to the AI – and no technical knowledge is needed for this. Many established features or even entire products will become obsolete when we can just ask ChatGPT (or whatever form of AI is the state of the art at that moment) to simply perform the task for us. Similarly, quite a few – probably very short-lived – startups are springing up with not much more than clever prompt engineering.

Ethical Questions

Of course, AI capable of generating images, texts, videos, and music raises many ethical and legal questions. Should an artist be paid if their photo, painting or signature style was used as a basis for the AI to create the opening image of a blog post? Or a newspaper cover? And how can it be traced back if someone’s images or poems published on the internet influenced the final result by 0.2%?

How can AI be aligned to any ethical, moral, or world view? And even if we manage to apply such alignment, whose values should it conform to? There is no absolute moral or truth, especially in today’s tense world (just think of the abortion issue, the seemingly irreconcilable differences between the left and right, or religious ideals).

In Conclusion

Based on the above, we can confidently say that we are dealing with a phenomenon comparable to the great technological revolutions of history (agricultural, industrial, informational), but with a good chance of having an even greater impact than them. Unlike previous technological revolutions, the AI era is arriving at breakneck speed, without a long transitional period. An unstoppable change of such magnitude is taking place around us that even the best experts cannot assess its impact. Only one thing is certain: the world will soon be very different.

Buckle up!

![too-much-work-not-enough-time[1].jpg](https://vbandidotnet.files.wordpress.com/2018/03/too-much-work-not-enough-time1.jpg)

![Fisher-Price-Toy-RC-Remote-Control-Thomas-The[1].jpg](https://vbandidotnet.files.wordpress.com/2018/03/fisher-price-toy-rc-remote-control-thomas-the1.jpg)

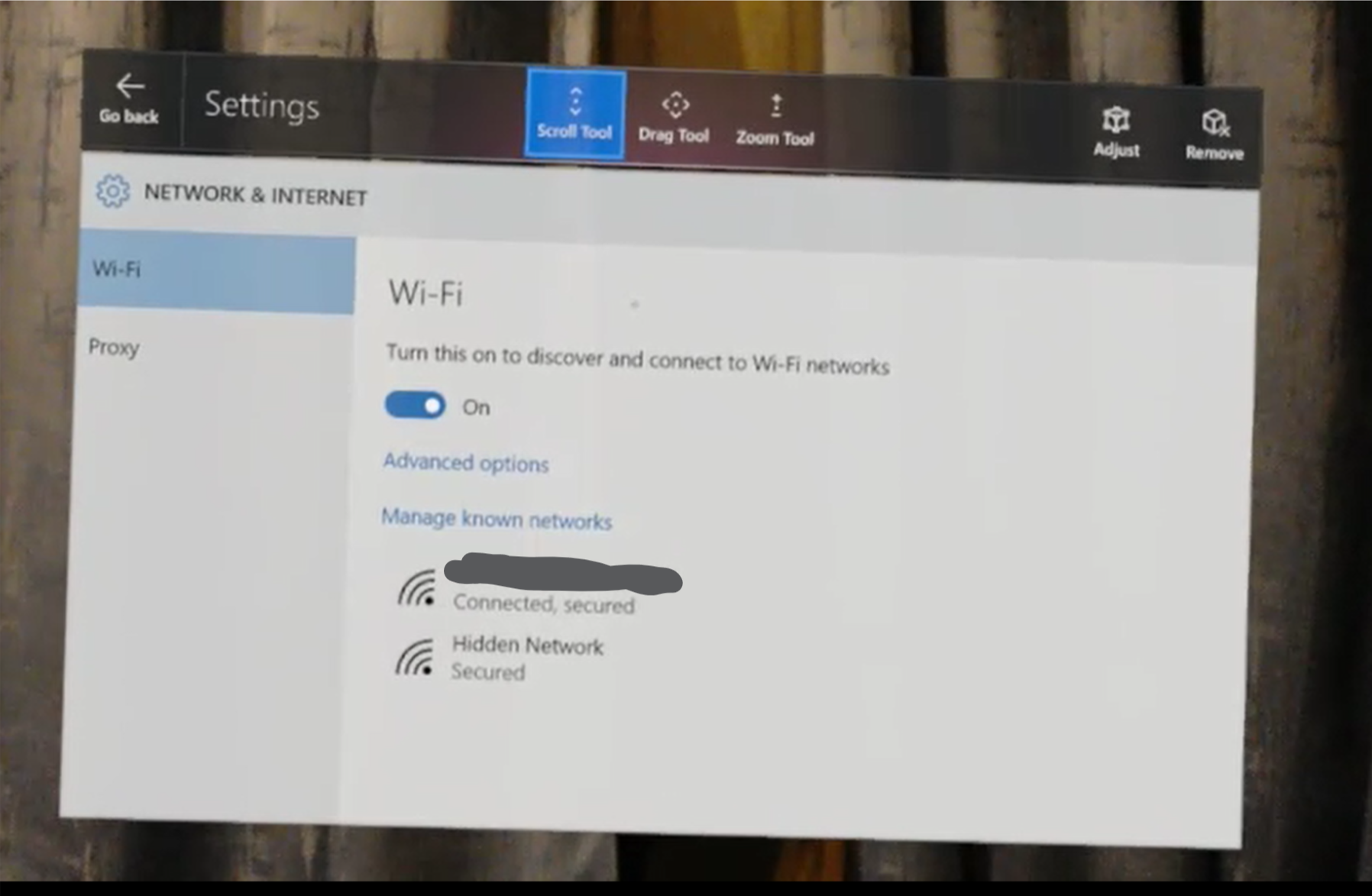

As you can see, the computer has to be connected to the Internet (and has to have a Wi-Fi adapter) for the Mobile hotspot to be enabled. If you can’t see the above warning, all is OK – turn on the “Share my Internet connection with other devices” checkbox.

As you can see, the computer has to be connected to the Internet (and has to have a Wi-Fi adapter) for the Mobile hotspot to be enabled. If you can’t see the above warning, all is OK – turn on the “Share my Internet connection with other devices” checkbox.